Distributed Data Storage Cloud: Complete Guide for 2026

Outline

– Section 1: Introduction and the State of Distributed Data in 2026

– Section 2: Storage Architectures—Block, File, Object, and Data Protection

– Section 3: Cloud Foundations—Regions, Durability, and Data Locality

– Section 4: Performance Playbook—Latency, Consistency, and Cost-Aware Speed

– Section 5: Conclusion—Practical Roadmap and Decision Checklist

Introduction and the State of Distributed Data in 2026

Distributed data is no longer a niche design choice; it is the default reality for organizations that span time zones, devices, and regulations. In 2026, analytics platforms ingest events from billions of sensors, transactional systems scale across continents, and teams expect near-instant answers. The reason is simple: value tends to hide at the intersections—between services, datasets, and users. A distributed approach turns those intersections into discoverable paths, but it also introduces complexity that demands discipline. Understanding the landscape—what to store, where, and how to access it—beats any single tool or buzzword.

At the heart of distributed data are a few durable ideas. The CAP trade-off reminds us that, during network partitions, you maintain either strong consistency or availability; real systems tune for different points on that spectrum. Consistency models matter: strong, eventual, bounded staleness, and causal consistency exist to match business needs. For example, a global catalog can accept eventual consistency for product views, while a payment ledger typically requires strong guarantees. Data models are equally diverse: relational tables excel at structured, transactional workloads; key-value stores shine for high-throughput lookups; document stores fit evolving schemas; wide-column systems handle time-series and logs; graphs capture relationships such as recommendations and dependencies.

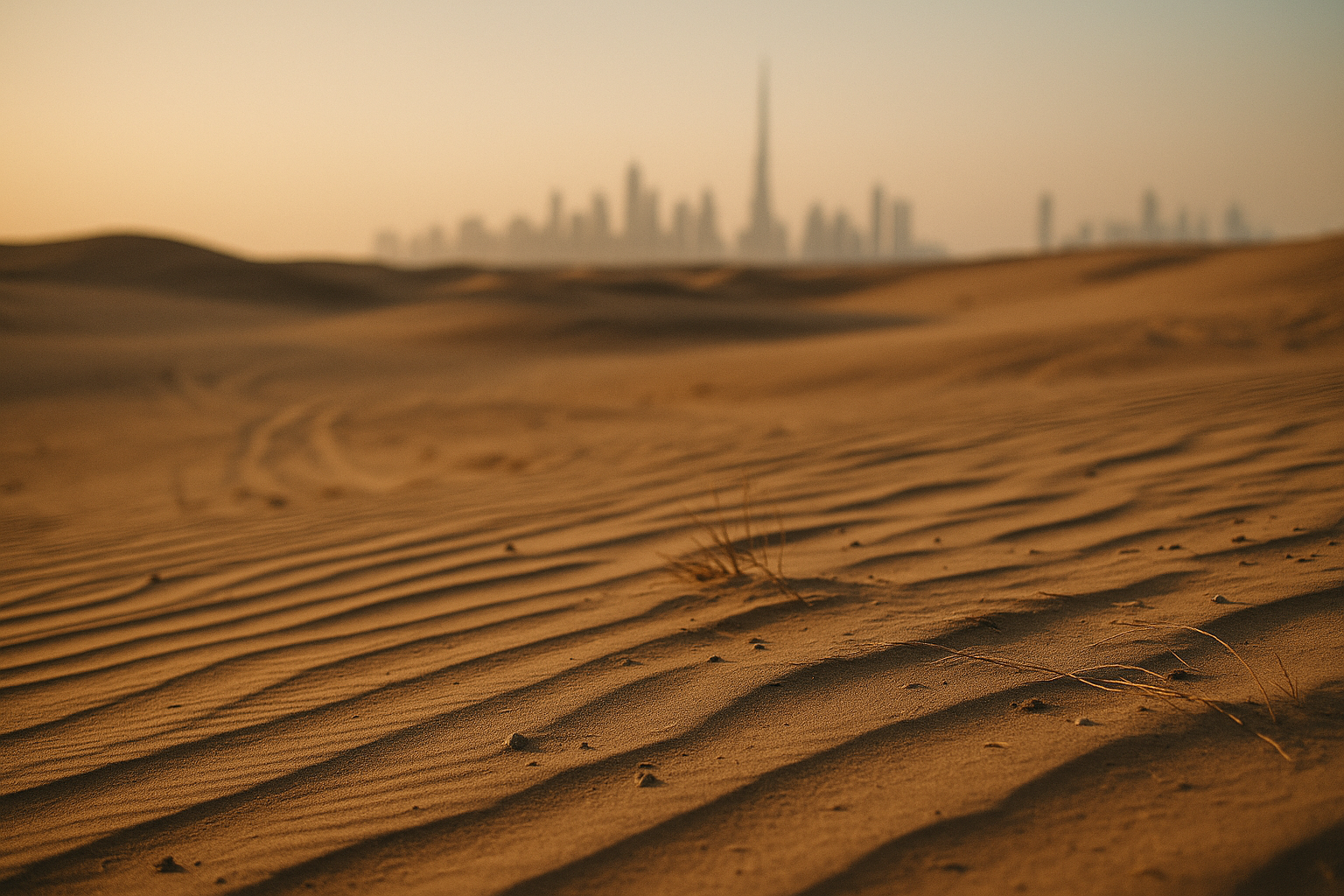

Why this matters now: data volumes grow roughly exponentially, but budgets and latency expectations do not. Long-haul intercontinental round trips often sit around 150–200 ms, and the speed of light in fiber sets a floor that no amount of tuning removes. Meanwhile, privacy laws and data-sovereignty requirements shape where bytes can live. As a result, architecture decisions revolve around splitting reads and writes intelligently, placing data close to where it’s used, and treating failure as a normal operating mode. Think of your platform as a city: roads (networks), neighborhoods (regions), zoning laws (compliance), and public services (platform teams). With that map in mind, the rest of this guide focuses on making practical, durable choices for storage and cloud strategy.
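To make the latency floor concrete, here is a rough back-of-the-envelope sketch. It assumes light in fiber travels at about two thirds of its vacuum speed, and the path lengths are illustrative figures, not measurements of any real route. Real round trips are higher because of routing detours, queuing, and processing.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_FACTOR = 2 / 3               # assumption: light in fiber is roughly 2/3 as fast


def min_round_trip_ms(path_km: float) -> float:
    """Lower bound on round-trip time over a fiber path of the given length."""
    one_way_seconds = path_km / (SPEED_OF_LIGHT_KM_S * FIBER_FACTOR)
    return 2 * one_way_seconds * 1000


# Illustrative path lengths only.
for km in (1_000, 6_000, 12_000):
    print(f"{km:>6} km path: at least {min_round_trip_ms(km):.1f} ms round trip")
```

A 6,000 km path already costs about 60 ms before any software runs, which is why placing data near its readers matters more than micro-optimizing a distant service.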

Consider these early signals that you need a distributed design:

– Users in multiple continents complain about latency peaks during traffic surges.

– Data refresh SLAs slip because pipelines wait on a single region or cluster.

– Compliance requires certain records to remain in specific jurisdictions.

– Storage costs creep up without a clear view of hot versus cold data.

If more than one applies, a structured, distributed approach is likely overdue.

Storage Architectures—Block, File, Object, and Data Protection

Choosing storage is like choosing the foundation for a building: the walls, windows, and wiring depend on it. Three canonical interfaces dominate: block, file, and object. Block storage presents raw volumes to operating systems, which suits databases that expect local disks and low-latency I/O. File storage exposes a hierarchical namespace and shared semantics, useful for analytics teams, ML feature stores, or legacy applications that depend on POSIX-like operations. Object storage treats data as whole objects addressed by keys, replaced rather than edited in place; it scales horizontally, supports versioning, and pairs well with content delivery and lakehouse-style analytics. In practice, platforms combine them: transactional tables on block, working sets and collaboration on file, and archival or large datasets on object.

Hardware matters too. HDDs offer lower cost per TB and are appropriate for sequential reads and deep archives. SSDs and NVMe drives deliver low latency and high IOPS for hot paths like indexes and write-ahead logs. A common tiering pattern keeps:

– Hot data on NVMe or high-performance SSDs for sub-millisecond access.

– Warm data on general SSD or fast HDD for minutes-to-hours lookups.

– Cold data on high-capacity HDD or archive tiers for infrequent access.

Lifecycle policies can migrate objects across tiers automatically, trading pennies for performance where it counts.
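A lifecycle policy of this kind can be sketched as a small classification rule. This is a minimal illustration, assuming tiering is driven only by time since last access; the 7-day and 90-day thresholds and the tier names are hypothetical and should come from your own access data.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical thresholds; tune them from measured access patterns.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Pick a storage tier from the time elapsed since the last access."""
    age = now - last_access
    if age <= HOT_WINDOW:
        return "hot"    # NVMe or high-performance SSD
    if age <= WARM_WINDOW:
        return "warm"   # general SSD or fast HDD
    return "cold"       # high-capacity HDD or archive tier


now = datetime(2026, 1, 31, tzinfo=timezone.utc)
for days in (1, 30, 400):
    print(days, "days idle ->", choose_tier(now - timedelta(days=days), now))
```

Real policies usually also weigh object size and retrieval cost, since moving many small objects to an archive tier can cost more than it saves.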

Protecting data is non-negotiable. Replication (e.g., 3× copies) is simple and fast to repair, but it multiplies raw storage by the replication factor. Erasure coding slices data into fragments plus parity (for example, 10 data + 4 parity, a 1.4× overhead), achieving comparable durability with lower overhead, often around 1.3–1.6× instead of 3×, at the expense of more CPU and network traffic during rebuilds. Many platforms combine both: replication for hot writes and small objects; erasure coding for large, relatively static blobs. Versioning plus immutability policies help defend against accidental deletes and ransomware, while audit logs provide traceability.
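The overhead comparison is simple arithmetic, sketched below. The 500 TB logical dataset is an assumed figure for illustration; the 3× and 10 + 4 layouts are the ones mentioned in the text.

```python
def replication_overhead(copies: int) -> float:
    """Raw bytes stored per logical byte under n-way replication."""
    return float(copies)


def erasure_overhead(data_fragments: int, parity_fragments: int) -> float:
    """Raw bytes stored per logical byte under a data+parity erasure code."""
    return (data_fragments + parity_fragments) / data_fragments


logical_tb = 500  # assumed dataset size, for illustration only

for label, overhead, tolerated in (
    ("3x replication", replication_overhead(3), 2),  # survives losing 2 of 3 copies
    ("10+4 erasure code", erasure_overhead(10, 4), 4),  # survives losing any 4 fragments
):
    print(f"{label}: {overhead:.1f}x overhead, "
          f"{logical_tb * overhead:.0f} TB raw, tolerates {tolerated} lost pieces")
```

The catch the text mentions shows up at repair time: rebuilding one lost fragment in a 10 + 4 layout means reading 10 surviving fragments, whereas a lost replica is restored by copying one.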

Durability and availability targets should be explicit. Object stores commonly advertise double-digit “nines” of durability (for example, 99.999999999%, or eleven nines), achieved by distributing fragments across failure domains. Availability, meaning how often the system is reachable, tends to be lower than durability and depends on zones, routing, and maintenance windows. Backup strategy remains essential even with high durability. A pragmatic 3-2-1 rule (three copies, on two media types, with one offline or in a separate region) reduces correlated risk. Encryption in transit and at rest is table stakes, but key management and rotation schedules are what keep that promise intact. Add data classification to avoid overprotecting trivial assets and underprotecting crown jewels; it also clarifies which datasets deserve SSD budgets or archival cold tiers.
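To get a feel for what a durability figure implies, the sketch below converts "nines" into an expected number of lost objects per year. It assumes the advertised figure is an annual, per-object probability and that losses are independent, which is a simplification; the object count is illustrative.

```python
def expected_annual_losses(object_count: int, nines: int) -> float:
    """Expected objects lost per year, given durability expressed as a count of nines."""
    annual_loss_probability = 10.0 ** -nines  # eleven nines -> 1e-11
    return object_count * annual_loss_probability


objects = 1_000_000_000  # assumed: one billion stored objects
losses = expected_annual_losses(objects, nines=11)
print(f"expected losses per year: {losses:.4f}")
print(f"roughly one object every {1 / losses:.0f} years")
```

Numbers like these cover hardware and media failure only. Accidental deletes, bad deployments, and compromised credentials are not part of the durability figure, which is why backups and versioning still matter.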

Cost modeling should be tied to access patterns. Track read/write ratios, average object size, and retrieval frequency. Small-object workloads benefit from compaction or bundling to avoid overhead. Large sequential reads align well with erasure-coded archives. Where possible, push computation to data—query-in-place on object stores or storage-attached processing—so you move fewer bytes across the network. These trade-offs are not one-time decisions; revisit them quarterly as workloads and prices shift.

Cloud Foundations—Regions, Durability, and Data Locality

The cloud is a mesh of regions and zones stitched together by high-speed networks and control planes. A region contains multiple isolated zones; building across at least two zones reduces the blast radius of failures like power, cooling, or top-of-rack switching incidents. Cross-region replication protects against rare but consequential events. Think in layers: application tier, data tier, and control plane services (identity, policy, monitoring). A healthy design treats each layer as independently recoverable, with periodic game days that verify failover runbooks actually work.

Durability and locality shape one another. Placing replicas in different zones increases durability and availability, but write latency rises with distance. A common pattern is to designate a primary region for writes and allow read replicas in other regions. For multi-active designs, conflict resolution becomes a first-class concern: last-write-wins is simple but can silently drop updates; vector clocks detect concurrent updates, and CRDT-style data types merge them deterministically, at the cost of higher complexity. Map these choices to user expectations: collaborative editing can accept slight staleness if intent is preserved; financial postings usually cannot.
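The difference between last-write-wins and vector clocks can be shown in a few lines. This is a minimal sketch, assuming each region increments its own counter on every local write; the region names are hypothetical.

```python
from typing import Dict

VectorClock = Dict[str, int]  # region name -> number of writes seen from that region


def compare(a: VectorClock, b: VectorClock) -> str:
    """Classify how two versions relate: equal, before, after, or concurrent."""
    nodes = set(a) | set(b)
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"  # neither version has seen the other: a true conflict


def merge(a: VectorClock, b: VectorClock) -> VectorClock:
    """Clock to attach to a value that reconciles both versions."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in set(a) | set(b)}


eu_version = {"eu": 2, "us": 1}
us_version = {"eu": 1, "us": 2}
print(compare(eu_version, us_version))  # concurrent
print(merge(eu_version, us_version))
```

Last-write-wins would pick one of these two versions by timestamp and discard the other. The vector clock only flags the conflict; deciding how to combine the values is still up to the application or a CRDT-style merge rule.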

Data sovereignty and privacy laws increasingly govern placement. Some records must remain within a country or union; audits may require evidence of residency and access controls. Practical steps include:

– Tagging datasets with residency and sensitivity labels.

– Using per-region encryption keys with restricted operations.

– Isolating pipelines so that only aggregates leave restricted regions.

– Routing traffic based on user jurisdiction, not just network latency.

Documenting these controls is as important as implementing them, especially for certifications and third-party assessments.
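The tagging, aggregate-only export, and jurisdiction-based routing steps above can be expressed as small policy checks. Everything named here is hypothetical: the region identifiers, the `ANY` residency label, and the rule that any aggregate may leave a restricted region are illustrative assumptions, not legal guidance.

```python
from dataclasses import dataclass

# Hypothetical mapping from region identifier to legal jurisdiction.
REGION_JURISDICTION = {"eu-region-1": "EU", "us-region-1": "US"}


@dataclass(frozen=True)
class Dataset:
    name: str
    residency: str    # jurisdiction the raw records must stay in, or "ANY"
    sensitivity: str  # e.g. "public", "internal", "restricted"


def may_store(dataset: Dataset, region: str) -> bool:
    """Raw records may live only in regions matching the residency label."""
    if dataset.residency == "ANY":
        return True
    return REGION_JURISDICTION.get(region) == dataset.residency


def may_transfer(dataset: Dataset, target_region: str, aggregated: bool) -> bool:
    """Assumed rule: only aggregates may leave a restricted jurisdiction."""
    return may_store(dataset, target_region) or aggregated


def home_region(user_jurisdiction: str) -> str:
    """Route by jurisdiction first; latency-based choice would come after this."""
    for region, jurisdiction in REGION_JURISDICTION.items():
        if jurisdiction == user_jurisdiction:
            return region
    raise LookupError(f"no region configured for {user_jurisdiction}")


orders = Dataset("orders", residency="EU", sensitivity="restricted")
print(may_store(orders, "us-region-1"))                      # False
print(may_transfer(orders, "us-region-1", aggregated=True))  # True
print(home_region("EU"))                                     # eu-region-1
```

Keeping rules like these in code also gives auditors something concrete to review alongside the written controls.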

Networking economics deserve more attention than they often get. In many clouds, moving data out of a region costs more than moving it in, and cross-region replication adds a recurring transfer charge that grows with write volume. Caching reduces egress by serving hot objects near users. Compression and columnar formats shrink payloads for analytics. Co-locating compute with storage avoids “data gravity” friction, where datasets become too large to move promptly. Multi-cloud strategies can increase resilience and negotiation leverage, but they also multiply surface area: IAM policies, observability, and incident procedures must be normalized to stay manageable. Hybrid models, with edge locations for low-latency reads and centralized regions for writes and analytics, offer a workable middle path when well-instrumented.

Finally, practice shared responsibility with clarity. The provider keeps the infrastructure healthy, but you own data integrity, access policies, and recovery posture. Track recovery point objective (RPO) and recovery time objective (RTO) per workload. For example, a customer-facing catalog might target RPO 5 minutes and RTO 15 minutes with cross-zone failover and asynchronous replication; a ledger aims for an RPO close to zero with synchronous writes across two zones. The right mix is the one that turns business tolerance for loss and downtime into explicit, measured objectives.
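Objectives only help if they are checked against measurements. The sketch below compares a workload's targets with an observed replication lag and a failover time from a drill. The measured values are made up for illustration, and it assumes replication lag is a reasonable proxy for the data you would lose in a failover.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RecoveryObjective:
    workload: str
    rpo_seconds: float  # maximum tolerable data loss, expressed as time
    rto_seconds: float  # maximum tolerable time to restore service


def violations(objective: RecoveryObjective,
               replication_lag_seconds: float,
               failover_seconds: float) -> List[str]:
    """Return human-readable violations; an empty list means objectives are met."""
    problems = []
    if replication_lag_seconds > objective.rpo_seconds:
        problems.append(
            f"{objective.workload}: RPO exceeded "
            f"({replication_lag_seconds:.0f}s lag > {objective.rpo_seconds:.0f}s)")
    if failover_seconds > objective.rto_seconds:
        problems.append(
            f"{objective.workload}: RTO exceeded "
            f"({failover_seconds:.0f}s failover > {objective.rto_seconds:.0f}s)")
    return problems


# Targets from the catalog example: RPO 5 minutes, RTO 15 minutes.
catalog = RecoveryObjective("catalog", rpo_seconds=5 * 60, rto_seconds=15 * 60)

# Illustrative measurements from a hypothetical game day.
print(violations(catalog, replication_lag_seconds=90, failover_seconds=1200))
```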

Performance Playbook—Latency, Consistency, and Cost-Aware Speed

Performance in distributed systems is a budgeting exercise. You start with a fixed allowance—physics, queues, CPU—and decide where to spend it. Break end-to-end latency into legs: client to edge, edge to region, service to database, and back again. Track p50, p95, and p99; the long tail is where customer perception is formed. A practical target might read p99 under 400 ms for global read paths and under 200 ms regionally, with clear degradation behavior when caches miss or replicas lag. Timeouts and retries should be explicit, jittered, and backpressured so they do not amplify partial failures.
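Two of the ideas above lend themselves to a short sketch: reading tail percentiles from latency samples, and spacing retries with capped exponential backoff plus jitter. The nearest-rank percentile method and the "full jitter" variant are choices made for this example, and the base delay and cap are hypothetical defaults.

```python
import random
from typing import List, Optional, Sequence


def percentile(samples: Sequence[float], p: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, -(-p * len(ordered) // 100))  # integer ceiling of p*n/100
    return ordered[rank - 1]


def backoff_delays(attempts: int, base: float = 0.1, cap: float = 5.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Capped exponential backoff with full jitter, in seconds."""
    rng = rng or random.Random()
    return [rng.uniform(0.0, min(cap, base * 2 ** attempt))
            for attempt in range(attempts)]


latencies_ms = list(range(1, 101))  # synthetic samples: 1..100 ms
print("p50:", percentile(latencies_ms, 50))
print("p95:", percentile(latencies_ms, 95))
print("p99:", percentile(latencies_ms, 99))
print("retry delays:", [round(d, 3) for d in backoff_delays(5)])
```

Jitter spreads retries from many clients over time, so a brief outage does not turn into a synchronized retry storm. Pair it with a retry budget or a maximum attempt count to keep backpressure intact.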

Consistency settings directly influence throughput and latency. Strong consistency adds write latency roughly equal to the round trip to the farthest replica that must acknowledge the write: often on the order of a millisecond between zones in one region, and tens of milliseconds or more across regions. Eventual consistency can cut tail latencies and improve throughput, particularly for read-heavy workloads. Techniques like read-your-writes in a session, quorum reads, or bounded staleness balance user expectations with performance. For mixed workloads, consider CQRS-style separation: write-optimized paths capture intent quickly, while read-optimized views update asynchronously. This can keep user flows snappy while preserving correctness on the authoritative side.
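Quorum reads rest on a simple overlap rule: with N replicas, a write acknowledged by W of them and a read that consults R of them share at least one replica whenever R + W > N. The sketch below shows the rule and a read that keeps the highest-versioned response. The version numbers and values are illustrative, and real systems add read repair and failure handling on top.

```python
from typing import List, Tuple


def quorums_overlap(n: int, r: int, w: int) -> bool:
    """True when every read quorum shares at least one replica with every write quorum."""
    return r + w > n


def quorum_read(responses: List[Tuple[int, str]], r: int) -> str:
    """Return the value with the highest version among at least r replica responses."""
    if len(responses) < r:
        raise RuntimeError("not enough replicas answered to form a read quorum")
    _version, value = max(responses, key=lambda pair: pair[0])
    return value


print(quorums_overlap(n=3, r=2, w=2))  # True: reads see the latest acknowledged write
print(quorums_overlap(n=3, r=1, w=1))  # False: fast, but reads may be stale

# Two of three replicas answered; one has not yet applied version 5.
print(quorum_read([(4, "old-price"), (5, "new-price")], r=2))  # new-price
```

Lowering R or W buys latency at the cost of possible staleness, which is the trade the paragraph above describes.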

Caching is both a friend and a liability. Warm caches deliver delightful speeds; cold caches expose the true speed of the backend. Place caches at edges for static and semi-dynamic assets; use request coalescing to prevent thundering herds. For data platforms, materialized views and precomputed aggregates convert repeated queries into cheap lookups. Where applicable, bloom filters, skip indexes, or approximate algorithms (e.g., HyperLogLog-like cardinality estimates) trade small errors for major performance wins in analytics. Measure hit ratios, eviction rates, and staleness windows to keep benefits honest.
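As one example of trading small errors for speed, here is a minimal Bloom filter sketch. It can answer "definitely not present" without touching storage, at the price of occasional false positives. The bit-array size, hash count, and key format are arbitrary choices for illustration, and SHA-256 is used only for convenience, not because it is the best hash for this purpose.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Set membership with no false negatives and a tunable false-positive rate."""

    def __init__(self, size_bits: int = 1024, hashes: int = 3) -> None:
        self.size = size_bits
        self.hashes = hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, key: str) -> Iterator[int]:
        # Derive several bit positions by salting the key with the hash index.
        for i in range(self.hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        """False means definitely absent; True means possibly present."""
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(key))


seen = BloomFilter()
for key in ("user:1", "user:2", "user:3"):
    seen.add(key)

print(seen.might_contain("user:2"))    # True
print(seen.might_contain("user:999"))  # almost certainly False
```

A filter like this sits in front of an expensive lookup: a negative answer skips the read entirely, while a positive answer still has to be confirmed against the real store.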

Storage formats and access patterns matter. Columnar layouts suit analytical scans by touching only needed columns and compressing well; row-oriented layouts favor transactional updates. Small writes can be batched, coalesced, or logged sequentially before compaction into larger, scan-friendly files. For object storage, keep objects large enough to amortize per-request overhead but small enough for parallel retrieval. Parallelism pays until it fights with backend limits; add rate limiting and circuit breakers to stay within SLOs. Observe queue depths, CPU utilization, and GC pauses; queues should hover in a stable, low-variance range rather than oscillating.
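Rate limiting in front of a backend is often implemented as a token bucket. The sketch below is one minimal version, with the clock passed in explicitly so the behavior is easy to test; the rate and burst capacity are hypothetical values.

```python
class TokenBucket:
    """Allow bursts up to `capacity` while enforcing an average rate of `rate_per_s`."""

    def __init__(self, rate_per_s: float, capacity: float, now: float = 0.0) -> None:
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill according to elapsed time, then spend `cost` tokens if available."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate_per_s=1.0, capacity=2.0)
print([bucket.allow(now=0.0) for _ in range(3)])  # [True, True, False]
print(bucket.allow(now=1.0))                      # True: one token refilled
```

In production the `now` argument would typically come from a monotonic clock, and rejected requests would be queued or shed rather than retried immediately.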

Costs belong in the same dashboard as latency. A request that is 20 ms faster but costs 5× more may not be worth it. Track:

– Egress per endpoint.

– Storage per tier and replication mode.

– Compute hours by workload phase.

– Cost per thousand requests.

With this visibility, you can try targeted experiments: enable stronger consistency only where it changes outcomes, move the hottest 5% of keys to SSD-backed stores, or prefetch objects during off-peak windows. The result is not just speed; it is speed you can sustain without surprise bills.
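Putting cost next to latency can start with two small calculations: cost per thousand requests, and the extra cost paid for each millisecond saved. All prices and latencies below are invented for illustration; only the "20 ms faster at 5× the cost" shape comes from the text.

```python
def cost_per_thousand_requests(total_cost: float, request_count: int) -> float:
    """Normalize spend so endpoints with different traffic can be compared."""
    if request_count <= 0:
        raise ValueError("request_count must be positive")
    return total_cost / request_count * 1000


def extra_cost_per_ms_saved(baseline_cost: float, faster_cost: float,
                            baseline_ms: float, faster_ms: float) -> float:
    """Additional cost (same unit as the inputs) for each millisecond of latency removed."""
    saved_ms = baseline_ms - faster_ms
    if saved_ms <= 0:
        raise ValueError("the faster option must actually be faster")
    return (faster_cost - baseline_cost) / saved_ms


# Hypothetical monthly figures for one endpoint.
baseline = cost_per_thousand_requests(total_cost=40.0, request_count=2_000_000)
faster = baseline * 5  # the 5x option

print(f"baseline: {baseline:.3f} per 1k requests")
print(f"faster:   {faster:.3f} per 1k requests")
print(f"extra per ms saved: "
      f"{extra_cost_per_ms_saved(baseline, faster, baseline_ms=120, faster_ms=100):.4f}")
```

Whether that per-millisecond price is worth paying depends on the endpoint: it may be an easy yes for checkout and a clear no for a nightly report.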

Conclusion—Practical Roadmap and Decision Checklist

The aim of this guide is to give architects, data leaders, and engineers a grounded way to navigate distributed data, storage, and cloud choices in 2026. The through-line is intentional trade-offs. You are not seeking a universal solution; you are aligning consistency, durability, latency, and cost with what your users and regulators actually demand. Start by mapping workflows to service-level objectives, then choose storage interfaces and cloud topologies that satisfy those objectives with room to grow.

A concise checklist to turn plans into action:

– Define per-workload RPO/RTO, target p95/p99, and acceptable staleness.

– Classify data by sensitivity, residency, and access frequency.

– Choose storage by access pattern: block for transactional cores, file for shared work, object for scale and archives.

– Decide on protection: replication for hot paths, erasure coding for large, colder data, plus versioning and immutability.

– Place data near users while respecting sovereignty; document controls.

– Build across at least two zones, and test failover quarterly.

– Instrument cost per request and storage per tier; set guardrails.

– Add caches with explicit staleness policies; measure hit ratios.

– Practice incident game days; refine runbooks and escalation paths.

For teams evolving existing platforms, incremental wins compound. Begin with observability: capture latency histograms, queue depths, error budgets, and cost metrics. Next, introduce lifecycle policies for object data and adjust replication factors where appropriate. Move the hottest datasets to faster tiers and co-locate compute. Tune consistency settings for the top three endpoints by traffic. If you must adopt multi-cloud, start by harmonizing identity and logging; scattered control planes create confusion when minutes matter.

Looking ahead, data will continue to fragment across edges, devices, and regions, but well-chosen patterns tame that sprawl. Durable storage with clear protection semantics, cloud regions planned like a transit map, and performance tuned with a budgeter’s eye—that combination keeps platforms responsive and resilient. Treat architecture documents as living artifacts, and let runbooks and dashboards tell you when to pivot. With those habits, your systems will not only scale; they will adapt gracefully to the next wave of requirements without forcing a costly rebuild.