Software Body Construction: Complete Guide for 2026

Outline

– Section 1 — The Software Body of Construction: Concept and Context

– Section 2 — The Core Stack: Modules, Data, and Integration Patterns

– Section 3 — Implementation Blueprint: People, Process, Technology

– Section 4 — Field‑Proven Workflows: From Bid to Handover

– Section 5 — Conclusion and 2026 Roadmap

The Software Body of Construction: Concept and Context

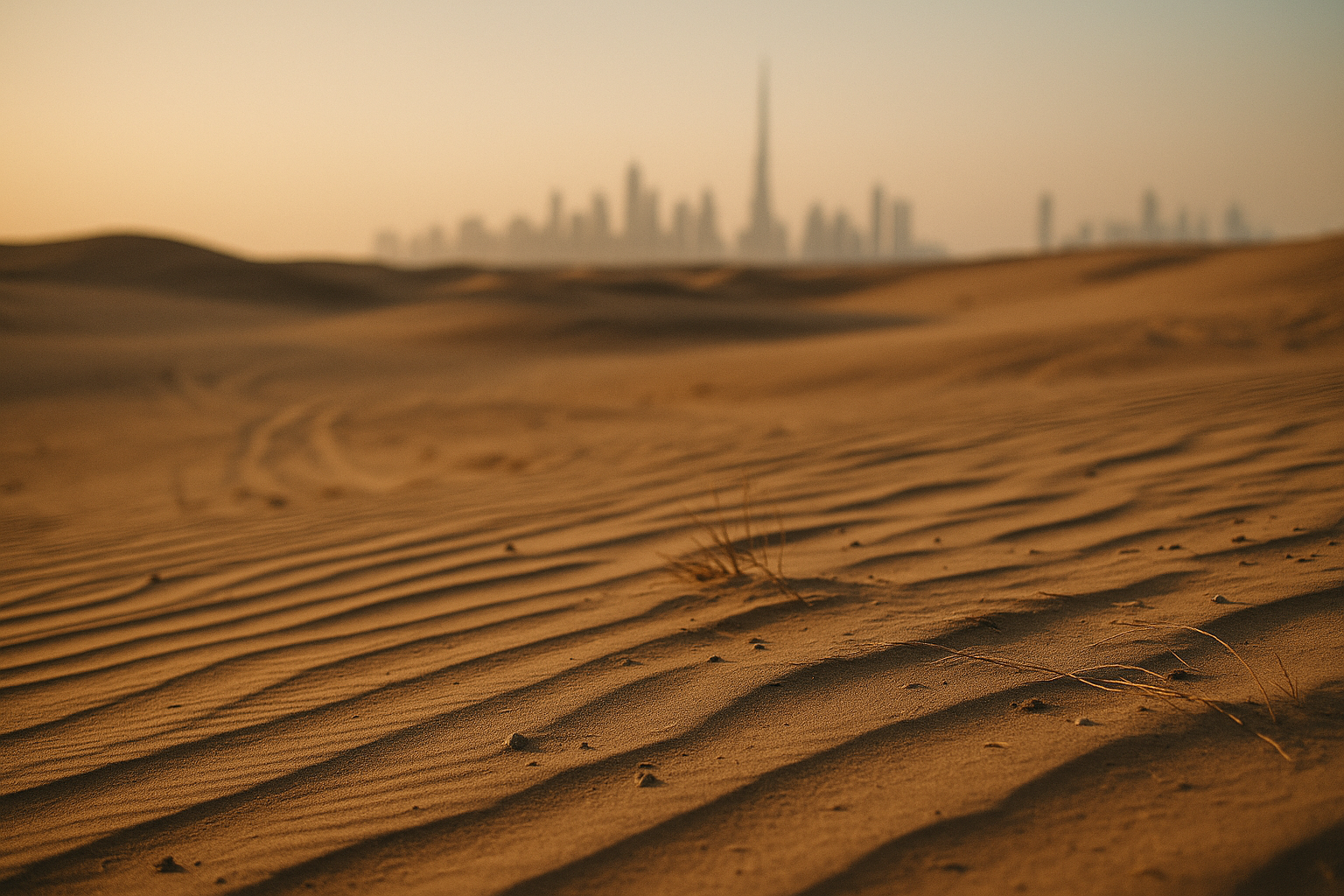

Imagine a building rising floor by floor: a skeleton of steel, muscles of cable and concrete, nerves humming with signals. A modern construction enterprise has a similar “software body.” Its skeleton is the overall architecture; its organs are core applications; its muscles are automations that move work forward; its nerves are integrations and alerts; its skin is the interface crews actually touch on site. When this body is healthy—aligned, nourished by accurate data, trained through repeatable processes—projects move with coordinated strength rather than lurching from issue to issue.

The stakes are practical and measurable. Complex projects routinely face schedule overruns and rework that eat into margins. Industry reports over the last decade have repeatedly noted double‑digit delays on large builds and meaningful cost impact tied to late design changes or poorly controlled information. A coherent software body reduces the “distance” between intent and action: RFIs flow to the right reviewers, takeoffs translate cleanly into budgets, schedules update when quantities shift, and field issues feed back into design with traceable context.

Key components illustrate how the body functions together rather than as isolated parts:

– Planning and design coordination: model exchange, drawing control, clash tracking, and structured comment threads.

– Preconstruction: quantity takeoff, cost libraries, scenario budgeting, and risk registers connected to assumptions.

– Delivery: scheduling, resource leveling, daily logs, safety observations, quality inspections, RFIs, and submittal routing.

– Commercial controls: commitments, progress claims, change events, and earned value tracking.

– Closeout and operations: as‑built data sets, manuals, asset registers, and warranty tracking.

The central argument is integration over accumulation. A pile of disconnected tools can create more friction than paper. By aligning modules to shared identifiers (projects, locations, cost codes, assets), setting naming conventions, and defining clear state transitions (draft, submitted, approved, superseded), organizations compress decision cycles. Even modest gains compound: shaving minutes off each inspection, batching approvals in predictable windows, and reconciling quantities weekly rather than monthly. The net effect is less guesswork, fewer surprises, and a calmer jobsite rhythm that feels more like a well‑tuned engine than a series of emergencies.
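The shared identifiers and state transitions described above can be sketched as a small state machine. This minimal Python sketch makes several assumptions:

- `Document` is a hypothetical record type.
- The four statuses are the ones named in the text.
- The transition table (including a return from submitted to draft for revision) and the sample identifiers are illustrative, not taken from any particular product.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFT = "draft"
    SUBMITTED = "submitted"
    APPROVED = "approved"
    SUPERSEDED = "superseded"


# Hypothetical transition table: the statuses each status may move to.
ALLOWED: dict[Status, set[Status]] = {
    Status.DRAFT: {Status.SUBMITTED},
    Status.SUBMITTED: {Status.APPROVED, Status.DRAFT},  # back to DRAFT = returned for revision
    Status.APPROVED: {Status.SUPERSEDED},
    Status.SUPERSEDED: set(),  # terminal: a superseded document never reopens
}


@dataclass
class Document:
    """Hypothetical record keyed by the shared identifiers every module uses."""
    doc_id: str
    project_id: str
    location_id: str
    cost_code: str
    status: Status = Status.DRAFT
    history: list[tuple[str, str]] = field(default_factory=list)

    def move_to(self, target: Status) -> None:
        # Reject any transition not listed in ALLOWED, then log old -> new.
        if target not in ALLOWED[self.status]:
            raise ValueError(f"illegal transition {self.status.value} -> {target.value}")
        self.history.append((self.status.value, target.value))
        self.status = target


rfi = Document("RFI-0042", "P-100", "L2-GRID-C4", "03-30-00")  # illustrative IDs
rfi.move_to(Status.SUBMITTED)
rfi.move_to(Status.APPROVED)
```

In this sketch an approved document can only move to superseded, so a stale revision cannot quietly re-enter review. A new revision would start as a fresh draft instead.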

The Core Stack: Modules, Data, and Integration Patterns

The construction software stack is often depicted as layers, but it behaves more like a mesh where information routes contextually. Start with data standards and work upward. Models, drawings, and asset data benefit from open, documented schemas that preserve geometry, classifications, and properties across systems. Structured handover templates ensure that what is captured in design or field verification becomes searchable knowledge later. Above the data foundation live the engines of cost, schedule, and quality—each a specialist, yet cross‑referencing shared entities and codes.

Typical modules and their responsibilities include:

– Building information modeling and coordination: reference models, issue viewpoints, and change history tied to locations and systems.

– Estimating and procurement: takeoff rules, cost assemblies, supplier comparisons, and lead time tracking that feed the master schedule.

– Scheduling: logic ties, buffers, and resource calendars that sync with daily plans and crew assignments.

– Field management: checklists, photos, and notes bound to locations and assets with timestamps and responsible parties.

– Commercial controls: commitments, change events, and payment workflows aligned with earned value and cash forecasts.
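Logic ties are what let a schedule react when one activity slips. A forward pass computes each activity's earliest start and finish. This minimal sketch assumes:

- finish‑to‑start ties with zero lag;
- durations in working days, with no calendars, buffers, or resource leveling;
- hypothetical activity names.

```python
from graphlib import TopologicalSorter


def forward_pass(
    durations: dict[str, int], preds: dict[str, list[str]]
) -> dict[str, tuple[int, int]]:
    """Earliest (start, finish) day for each activity; finish-to-start ties, zero lag."""
    graph = {act: preds.get(act, []) for act in durations}
    times: dict[str, tuple[int, int]] = {}
    # static_order() yields every predecessor before its successors
    # and raises graphlib.CycleError if the logic contains a loop.
    for act in TopologicalSorter(graph).static_order():
        start = max((times[p][1] for p in graph[act]), default=0)
        times[act] = (start, start + durations[act])
    return times


durations = {"excavate": 5, "formwork": 3, "rebar": 4, "pour": 1}
preds = {"formwork": ["excavate"], "rebar": ["excavate"], "pour": ["formwork", "rebar"]}
schedule = forward_pass(durations, preds)
```

Suppose rebar slips by two days (duration 6). Rerunning the pass moves the pour's earliest start from day 9 to day 11, with no manual edits to dependent activities.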

Integration patterns determine how smoothly the body moves. Event‑driven updates (webhooks, message queues) propagate changes as they occur, without brittle polling. Batch extract‑transform‑load (ETL) jobs move heavy data at defined intervals for reporting and compliance. On the analytics side, a warehouse or lakehouse collates operational feeds into curated marts for cost, schedule, safety, and quality. A stable integration fabric typically includes:

– Canonical IDs and dictionaries for projects, cost codes, disciplines, and locations.

– Versioning and audit trails to preserve who changed what and when.

– Idempotent endpoints to avoid duplicates during retries.

– Clear contracts for error handling and fallbacks when a service is unavailable.
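Several of these properties fit in one small component: canonical dictionaries, audit trails, and idempotency. This minimal Python sketch of an idempotent event consumer assumes:

- a hypothetical `ChangeEvent` message carrying a sender‑assigned `event_id` that is reused on every retry;
- a hypothetical in‑memory cost‑code dictionary.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical canonical cost-code dictionary shared by every integrated system.
COST_CODES = {"03-30-00", "05-12-00", "26-05-00"}


@dataclass(frozen=True)
class ChangeEvent:
    event_id: str   # assigned once by the sender and reused on every retry
    project_id: str
    cost_code: str
    amount: float
    actor: str


class CommitmentLedger:
    """Applies each event at most once and keeps an audit trail of every attempt."""

    def __init__(self) -> None:
        self.seen: set[str] = set()
        self.totals: dict[tuple[str, str], float] = {}
        self.audit: list[tuple[str, str, str, str]] = []  # (utc_time, event_id, actor, outcome)

    def _log(self, ev: ChangeEvent, outcome: str) -> str:
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit.append((stamp, ev.event_id, ev.actor, outcome))
        return outcome

    def handle(self, ev: ChangeEvent) -> str:
        if ev.event_id in self.seen:
            return self._log(ev, "duplicate")  # retry of an applied event: no-op
        if ev.cost_code not in COST_CODES:
            return self._log(ev, "rejected")   # not marked seen, so a corrected resend can apply
        key = (ev.project_id, ev.cost_code)
        self.totals[key] = self.totals.get(key, 0.0) + ev.amount
        self.seen.add(ev.event_id)
        return self._log(ev, "applied")
```

Delivering the same event twice, as a retrying webhook might, changes the total once and leaves two audit entries. In production, the seen‑ID check and the total update would need to commit atomically, for example in one database transaction. Otherwise a crash between them reopens the duplicate window.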

Architecture choices matter. Cloud deployment offers elastic capacity and reduces onsite maintenance, while on‑premises can satisfy stringent data residency or offline requirements. Hybrid models are common: sensitive archives and heavy compute on private infrastructure, collaboration and mobile sync in the cloud. Evaluate trade‑offs through the lens of latency (crew wait time), resilience (work continuity during outages), and portability (ability to change components without rewriting everything). The aim is a stack that behaves like a single, reliable system even though it is composed of many parts.

Implementation Blueprint: People, Process, Technology

Transformation succeeds when it addresses habits as well as hardware. A practical blueprint starts with discovery: map current workflows, name every document that drives a decision, and sketch the handoffs that create delays. This baseline becomes your improvement canvas. Next, align objectives to business outcomes—fewer RFIs per hundred drawings, faster submittal cycles, higher first‑time pass rates on inspections, tighter variance between planned and actual labor. Objectives should be measurable, time‑bound, and owned by named roles.
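Objectives like these only work if everyone computes them the same way. This minimal sketch assumes hypothetical inputs:

- counts of RFIs and drawings;
- first‑attempt inspection outcomes;
- planned versus actual labor hours.

The exact definitions are a local choice to agree during governance setup, not an industry standard.

```python
def rfis_per_hundred_drawings(rfi_count: int, drawing_count: int) -> float:
    return 100 * rfi_count / drawing_count


def first_time_pass_rate(first_attempt_passed: list[bool]) -> float:
    """Share of inspections that passed on the first attempt (one entry per inspection)."""
    return sum(first_attempt_passed) / len(first_attempt_passed)


def labor_variance_pct(planned_hours: float, actual_hours: float) -> float:
    """Positive when actual labor exceeds plan, negative when under."""
    return 100 * (actual_hours - planned_hours) / planned_hours


print(rfis_per_hundred_drawings(18, 600))               # 3.0
print(first_time_pass_rate([True, True, False, True]))  # 0.75
print(labor_variance_pct(1_000, 1_080))                 # 8.0
```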

Rollout proceeds in phases that build confidence rather than betting the project on a single leap:

– Phase 0: governance and data standards agreed, including naming, locations, codes, and status states.

– Phase 1: pilot a high‑leverage workflow (for example, RFIs and drawings) on a willing project with active leadership support.

– Phase 2: expand to scheduling and field inspections, integrating photo evidence and locations.

– Phase 3: connect cost control and change management with clear approval thresholds.

– Phase 4: institutionalize training, playbooks, and periodic audits to prevent drift.
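Phase 3's “clear approval thresholds” can be written down as data rather than left as tribal knowledge. This minimal sketch assumes a hypothetical three‑tier approval matrix. The limits and role names are placeholders that each organization would set for itself.

```python
# Hypothetical approval matrix: (upper limit, inclusive; approver role), ascending by limit.
THRESHOLDS: list[tuple[float, str]] = [
    (10_000, "project_manager"),
    (100_000, "operations_director"),
]
ABOVE_ALL = "executive_committee"


def approver_for(amount: float) -> str:
    """Route a change event to the lowest role whose limit covers it.

    Credits (negative amounts) are routed by size too, assuming a large
    deduction deserves the same scrutiny as a large addition.
    """
    size = abs(amount)
    for limit, role in THRESHOLDS:
        if size <= limit:
            return role
    return ABOVE_ALL
```

Keeping the matrix as a list makes audits straightforward: changing a limit is a reviewed data edit, not a change to routing logic.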

Quantifying value clarifies priorities. Consider a simple scenario: a crew lead spends 15 minutes daily compiling a report. Across 40 leads and 220 working days, that adds up to 2,200 hours a year (15 × 40 × 220 = 132,000 minutes). If streamlined capture and auto‑population cut that time in half, about 1,100 hours are reclaimed. Apply similar reasoning to submittal cycles (days saved per package), punch list churn (issues resolved per week), and drawing supersession (errors avoided due to automatic alerts). While results vary by project complexity, modeling a few representative processes helps secure buy‑in and guide sequencing.
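The crew‑report arithmetic is easy to parameterize so other processes can be modeled the same way. This is a minimal sketch. The 50% reduction is the scenario's assumption, not a measured result.

```python
def annual_hours(minutes_per_day: float, people: int, working_days: int) -> float:
    """Hours per year spent on a recurring daily task."""
    return minutes_per_day * people * working_days / 60


baseline = annual_hours(minutes_per_day=15, people=40, working_days=220)
reduction = 0.5  # assumed: streamlined capture halves the reporting time
reclaimed = baseline * reduction
print(f"baseline {baseline:,.0f} h, reclaimed {reclaimed:,.0f} h")
# prints: baseline 2,200 h, reclaimed 1,100 h
```

The same shape applies to the other examples: frequency, times the people or items involved, times the time saved per occurrence.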

People and change management are the multiplier. Appoint process owners who steward templates, checklists, and naming conventions. Pair training with immediate practice: micro‑lessons before a shift, then use the feature on that shift. Recognize good behaviors—clear photos, precise locations, timely closeouts—and publish short wins across the organization. Safeguards matter too: role‑based access to reduce noise, mobile device management to protect data, and backup plans for offline capture during outages. The guiding principle is humane rigor: make the right action the easy one, and the easy one becomes the norm.

Field‑Proven Workflows: From Bid to Handover

Preconstruction sets the tone. Start with a disciplined takeoff process that ties quantities to locations and work packages, then roll scenarios that test design options against cost, schedule, and risk. When procurement is integrated, long‑lead items become schedule constraints rather than late surprises. As planning matures, a 4D perspective—time layered onto models or location plans—exposes clashes between trades and space conflicts for staging, laydown, or crane swings. This alignment means the first day on site begins with a shared playbook rather than a stack of assumptions.
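Treating long‑lead items as schedule constraints amounts to working backward from the date each item is needed on site. This minimal sketch assumes calendar days (no working‑day calendar) and uses hypothetical items, lead times, and buffers.

```python
from datetime import date, timedelta

# (item, required-on-site date, supplier lead time in calendar days, safety buffer in days)
LongLead = tuple[str, date, int, int]


def latest_order_date(need_on_site: date, lead_time_days: int, buffer_days: int = 0) -> date:
    """Last day an order can be placed and still arrive with the buffer intact."""
    return need_on_site - timedelta(days=lead_time_days + buffer_days)


def late_orders(items: list[LongLead], today: date) -> list[str]:
    """Items whose latest order date has already passed."""
    return [name for name, need, lead, buf in items
            if latest_order_date(need, lead, buf) < today]


items: list[LongLead] = [
    ("switchgear", date(2026, 9, 1), 120, 14),
    ("curtain wall panels", date(2026, 11, 1), 90, 0),
]
print(late_orders(items, today=date(2026, 5, 1)))  # ['switchgear']
```

Running this check against the procurement log each week surfaces a missed order date while there is still time to resequence.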

Delivery is where momentum is made or lost, so workflows must be decisive and visible:

– Daily planning with Last Planner‑style commitments, linked to resource calendars and short‑interval schedules.

– RFI routing with service‑level expectations and templated fields that eliminate vague questions.

– Quality and safety checklists bound to locations and assets, with photo evidence and tags to enable trend analysis.

– Progress capture via quantities installed by location, feeding earned value and forecast curves.

– Change events that reference original assumptions, making cause and effect auditable.
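Quantities installed by location translate into earned value through the standard earned value management ratios:

- cost performance index, CPI = EV / AC;
- schedule performance index, SPI = EV / PV.

This minimal sketch assumes a hypothetical `WorkPackage` record. It measures physical percent complete as installed quantity over budgeted quantity, which is one common convention among several.

```python
from dataclasses import dataclass


@dataclass
class WorkPackage:
    """Hypothetical work package measured by location."""
    location: str
    budget_cost: float    # budget at completion (BAC) for the package
    budget_qty: float     # planned total quantity, e.g. cubic metres of concrete
    installed_qty: float  # quantity installed to date, from field progress capture
    planned_value: float  # budgeted cost of work scheduled to date (PV)
    actual_cost: float    # cost incurred to date (AC)


def earned_value(packages: list[WorkPackage]) -> dict[str, float]:
    # EV per package = BAC x physical percent complete, capped at 100%.
    ev = sum(p.budget_cost * min(p.installed_qty / p.budget_qty, 1.0) for p in packages)
    pv = sum(p.planned_value for p in packages)
    ac = sum(p.actual_cost for p in packages)
    return {"EV": ev, "PV": pv, "AC": ac, "CPI": ev / ac, "SPI": ev / pv}


packages = [
    WorkPackage("L2-slab", 100_000, 400, 200, 60_000, 55_000),
    WorkPackage("L1-columns", 50_000, 100, 100, 50_000, 45_000),
]
metrics = earned_value(packages)
```

With these illustrative numbers, CPI is 1.0, meaning the earned work cost what was budgeted. SPI is about 0.91, meaning less work has been earned than was scheduled to date.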

Going digital is not merely a convenience; it is a gain in precision. A photo anchored to gridlines and elevation beats a paragraph of guesses. An inspection driven by a template with explicit acceptance criteria tends to raise first‑time pass rates. Offline capture with seamless sync protects continuity when coverage fades, while geotagging and timestamps improve accountability without adding burden. For teams skeptical of new tools, run side‑by‑side sprints: two weeks with legacy steps, two weeks with the redesigned workflow. Compare cycle times, error counts, and rework. Let evidence, not opinion, steer the decision.
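Comparing the two sprints needs nothing more elaborate than consistent summaries. This minimal sketch assumes each item (an RFI, an inspection, a submittal) is logged with its cycle time, error count, and a rework flag. The sample values are illustrative, not measured data.

```python
from statistics import mean, median

# One tuple per item handled during a sprint: (cycle_days, errors_found, needed_rework)
Item = tuple[int, int, bool]


def summarize(items: list[Item]) -> dict[str, float]:
    return {
        # Median, because a few stalled items would drag a mean upward.
        "median_cycle_days": median(c for c, _, _ in items),
        "errors_per_item": mean(e for _, e, _ in items),
        "rework_rate": mean(1 if r else 0 for _, _, r in items),
    }


legacy: list[Item] = [(9, 2, True), (12, 1, False), (7, 0, True)]        # illustrative
redesigned: list[Item] = [(5, 0, False), (6, 1, False), (4, 0, True)]    # illustrative
before, after = summarize(legacy), summarize(redesigned)
for metric in before:
    print(f"{metric}: {before[metric]:.2f} -> {after[metric]:.2f}")
```

A two‑week sample is small, so treat the differences as directional rather than statistically conclusive.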

Closeout rewards steady discipline throughout. If submittals, changes, and field verifications are consistently tagged to systems and assets, assembling as‑built records becomes curation instead of a scavenger hunt. Deliver structured datasets alongside manuals so operators can search by location or asset, not leaf through binders. The difference shows up years later when maintenance teams find what they need in minutes and lifecycle costs shrink. A thoughtful finish is also an honest start for the next project, because lessons learned roll into updated templates, checklists, and training materials.
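Searching by location or asset depends on the handover data being structured rather than bound into manuals. This minimal sketch assumes a hypothetical `AssetRecord` schema. The IDs, systems, locations, and manual references are illustrative. Warranty dates are included because warranty tracking is part of closeout.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class AssetRecord:
    """Hypothetical handover record: one row per maintainable asset."""
    asset_id: str
    system: str
    location: str
    manual_ref: str      # pointer to the relevant O&M manual section
    warranty_end: date


class AssetRegister:
    def __init__(self, records: list[AssetRecord]) -> None:
        self.by_id = {r.asset_id: r for r in records}
        self.by_location: dict[str, list[AssetRecord]] = defaultdict(list)
        self.by_system: dict[str, list[AssetRecord]] = defaultdict(list)
        for r in records:
            self.by_location[r.location].append(r)
            self.by_system[r.system].append(r)

    def at(self, location: str) -> list[str]:
        """Asset IDs installed at a location (empty list if none)."""
        return [r.asset_id for r in self.by_location.get(location, [])]

    def in_system(self, system: str) -> list[str]:
        return [r.asset_id for r in self.by_system.get(system, [])]

    def warranties_expiring_before(self, cutoff: date) -> list[str]:
        return sorted(r.asset_id for r in self.by_id.values() if r.warranty_end < cutoff)


register = AssetRegister([
    AssetRecord("AHU-01", "HVAC", "L3-PLANT", "OM-23-01", date(2028, 6, 30)),
    AssetRecord("PMP-02", "Plumbing", "L3-PLANT", "OM-22-04", date(2027, 12, 31)),
    AssetRecord("LTG-10", "Lighting", "L1-LOBBY", "OM-26-11", date(2029, 1, 15)),
])
```

A maintenance technician standing in the plant room can call `register.at("L3-PLANT")` instead of leafing through binders.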

Conclusion and 2026 Roadmap

The horizon is bright and pragmatic. Analytics is moving closer to day‑to‑day decisions: models that suggest schedule buffers based on past performance, computer vision that flags safety risks from site imagery, and automated checks that validate submittals against design intent. Reality capture (laser scanning, photogrammetry) is making continuous verification routine, shrinking the gap between planned and installed work. Sustainability metrics are moving from slide decks into daily dashboards as teams track embodied impacts at the package level. Prefabrication and modular practices are pushing more work into controlled environments, where data discipline pays off even faster.

For different audiences, the moves are concrete:

– Owners and developers: require structured data deliverables by location, system, and asset; align payment milestones to verified progress; standardize handover schemas.

– General contractors: appoint process owners, adopt a shared dictionary, and prioritize two or three high‑leverage workflows for enterprise rollout.

– Specialty contractors: digitize the core (takeoff, field capture, production tracking), then integrate with schedule and change events to protect margins.

– Designers and coordinators: publish models and drawings with consistent classifications; track and resolve coordination issues with traceable viewpoints.

An actionable 12‑month path looks like this: quarter one establishes governance, dictionaries, and pilot selection. Quarter two proves value on a live project and documents playbooks. Quarter three expands into cost or schedule integration and formalizes training. Quarter four audits adoption, tunes templates, and locks in metrics as standard reporting. Keep the feedback loop tight: monthly reviews on cycle times, first‑time pass rates, and variance between plan and actual. Treat the software body like a living system—exercise it, measure it, and refine it.

The message for 2026 is simple and grounded. Teams that invest in a coherent software body gain predictable delivery, clearer accountability, and calmer projects. This is not about chasing novelty; it is about designing a durable digital backbone that helps people make better decisions faster. Start with standards, pick focused wins, and let results compound. The buildings you deliver will stand taller when your information does too.